Understanding Occam's Razor in Machine Learning: A Simplified Approach

Chapter 1: The Essence of Occam's Razor

Occam's Razor is a philosophical principle that asserts that among competing hypotheses, the one with the fewest assumptions should be selected. This concept resonates deeply within the realm of machine learning.

"Everything should be made as simple as possible, but not simpler."

— Albert Einstein

Previously, I discussed the No Free Lunch Theorem, which posits that no single machine learning algorithm excels universally across all scenarios. Similarly, Occam’s Razor, rooted in philosophical thought, suggests that simpler explanations or models are generally preferable.

Section 1.1: Philosophical Foundations

The origins of Occam's Razor can be traced back to ancient philosophers. Aristotle (384–322 BC) hinted at its essence when he suggested that, all else being equal, a demonstration that rests on fewer postulates is superior. Ptolemy (c. AD 90 – c. 168) echoed this sentiment with his principle of explaining phenomena by the simplest possible hypothesis.

It was not until the 14th century that William of Ockham articulated the phrase, “entities must not be multiplied beyond necessity,” solidifying the concept of Occam's Razor. Essentially, this principle endorses simpler solutions when confronted with multiple viable options.

Subsection 1.1.1: Probability Theory Justification

Occam's Razor can be justified through basic probability theory. More complex models typically rely on numerous assumptions. The more assumptions introduced, the higher the likelihood of one being incorrect. If an assumption does not enhance the model's accuracy, it merely escalates the risk of the entire model being flawed.

For instance, to explain the blue color of the sky, a straightforward explanation rooted in light properties is more credible than an elaborate theory involving extraterrestrials scattering blue dust in the atmosphere. The latter's complexity, laden with unverified assumptions, increases its chances of being incorrect.
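The arithmetic behind this argument is easy to check. The sketch below assumes, purely for illustration, that a model's assumptions are independent of one another and that each holds with the same probability; the function name `prob_all_hold` and the 0.95 figure are hypothetical, not taken from any source.

```python
import math

def prob_all_hold(assumption_probs):
    """Probability that every assumption holds, assuming independence."""
    return math.prod(assumption_probs)

# Hypothetical figure: each assumption is 95% likely to be correct.
for n in (1, 5, 20):
    p = prob_all_hold([0.95] * n)
    print(f"{n:2d} assumptions -> P(all correct) = {p:.3f}")
```

Under these assumptions, one assumption leaves a 95% chance that the explanation is sound, five leave about 77%, and twenty leave about 36%. Real assumptions are rarely independent, so treat the numbers as a rough intuition rather than a measurement.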

Section 1.2: Practical Implications in Machine Learning

Occam’s Razor, while seemingly straightforward, has significant implications in machine learning. In 1998, Pedro Domingos introduced “Occam’s Two Razors” to clarify this principle within the field:

- When two models exhibit the same generalization error, select the simpler one, as simplicity is inherently valuable.

- If two models show identical training errors, the simpler one is preferred, as it is likely to demonstrate superior generalization.

Domingos argued that the first razor is sound, while the second does not hold in general: a low training error says little on its own about how a model will perform on unseen data. Decisions based solely on a model's training performance can therefore lead to poor outcomes.

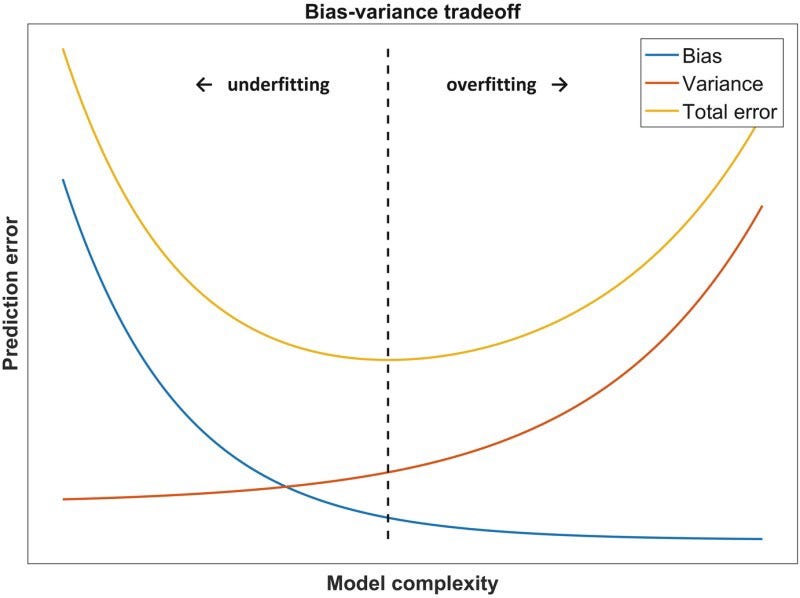

As illustrated above, Occam’s Razor points to a fundamental trade-off in machine learning. When selecting a model, the goal is to find one that is complex enough to avoid underfitting while remaining simple enough to prevent overfitting.
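To see this trade-off in numbers, here is a minimal sketch on synthetic data. Everything in it is an assumption made for illustration: the linear trend with Gaussian noise, the seed, and the three hypothetical models (`fit_constant`, `fit_line`, `fit_memorizer`), which stand in for a model that is too simple, one that matches the data, and one that is too flexible.

```python
import random

def mse(model, data):
    """Mean squared error of a prediction function over (x, y) pairs."""
    return sum((model(x) - y) ** 2 for x, y in data) / len(data)

def fit_constant(train):
    """Too simple: always predicts the mean of the training targets."""
    mean_y = sum(y for _, y in train) / len(train)
    return lambda x: mean_y

def fit_line(train):
    """Ordinary least squares for a single feature (closed form)."""
    n = len(train)
    mean_x = sum(x for x, _ in train) / n
    mean_y = sum(y for _, y in train) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in train)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in train)
    slope = sxy / sxx
    return lambda x: mean_y + slope * (x - mean_x)

def fit_memorizer(train):
    """Too flexible: returns the target of the nearest training point."""
    return lambda x: min(train, key=lambda pt: abs(pt[0] - x))[1]

def make_data(xs, rng, noise=2.0):
    """Synthetic data (an assumption): linear trend plus Gaussian noise."""
    return [(x, 2.0 * x + rng.gauss(0.0, noise)) for x in xs]

rng = random.Random(0)
train = make_data([i * 0.05 for i in range(200)], rng)
test = make_data([i * 0.05 + 0.025 for i in range(200)], rng)

models = {
    "constant": fit_constant(train),
    "line": fit_line(train),
    "memorizer": fit_memorizer(train),
}
for name, model in models.items():
    print(f"{name:10s} train MSE = {mse(model, train):6.2f}  "
          f"test MSE = {mse(model, test):6.2f}")
```

The memorizer's training error is zero by construction, since it looks up each training point's own target. Its test error should nevertheless be noticeably higher than the line's, because it reproduces the noise in the training targets. The constant model misses the trend entirely and does poorly on both sets.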

Chapter 2: Misinterpretations and Practical Considerations

Occam’s Razor should not be misconstrued to suggest that simpler models are always superior. This assumption contradicts both the premise of Occam’s first razor and the No Free Lunch Theorem, which asserts that no single algorithm is universally ideal across all tasks. A simpler model can only be deemed better if its generalization error is equal to or lower than that of a more complex counterpart.
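One way to turn this rule into a selection procedure is sketched below. It is an illustration rather than a standard algorithm: the function `pick_by_first_razor`, the use of parameter count as the complexity measure, and the candidate names and validation errors are all hypothetical.

```python
def pick_by_first_razor(candidates, tolerance=0.0):
    """Return the simplest candidate whose held-out error is within
    `tolerance` of the best held-out error.

    candidates: list of (name, complexity, heldout_error) tuples.
    """
    best_error = min(err for _, _, err in candidates)
    good_enough = [c for c in candidates if c[2] <= best_error + tolerance]
    return min(good_enough, key=lambda c: c[1])

# Hypothetical candidates: (name, number of parameters, validation error).
candidates = [
    ("linear", 10, 0.08),
    ("small_tree", 50, 0.05),
    ("deep_net", 100_000, 0.05),
]
print(pick_by_first_razor(candidates)[0])  # small_tree
```

The simplest candidate, `linear`, is not chosen because its held-out error is worse. Simplicity only breaks the tie between `small_tree` and `deep_net`, which generalize equally well in this made-up example.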

While Occam's Razor applies when a simpler model has equal or better generalization error, there are scenarios where a simpler model is chosen even if it performs worse. Simpler models can offer advantages such as:

- Reduced memory consumption.

- Quicker inference times.

- Enhanced explainability.

Consider a fraud detection scenario where a decision tree achieves 98% accuracy, while a neural network reaches 99%. If the application demands rapid inference and limited server memory, along with the necessity for clear explanations to regulators, the decision tree would likely be the superior choice. Unless the one-percentage-point difference in accuracy is critical, opting for the decision tree aligns better with the overall requirements.
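The reasoning in this scenario can be written down as a small filter-then-rank step. In the sketch below, only the two accuracy figures come from the scenario; the latency and memory numbers, the limits, the `explainable` flags, and the names `Candidate` and `choose` are hypothetical values picked for illustration.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    accuracy: float      # from the scenario above
    latency_ms: float    # hypothetical
    memory_mb: float     # hypothetical
    explainable: bool    # hypothetical judgment call

def choose(candidates, max_latency_ms, max_memory_mb, need_explainable):
    """Drop candidates that violate a hard requirement, then take the most accurate."""
    feasible = [
        c for c in candidates
        if c.latency_ms <= max_latency_ms
        and c.memory_mb <= max_memory_mb
        and (c.explainable or not need_explainable)
    ]
    if not feasible:
        return None  # no candidate satisfies every requirement
    return max(feasible, key=lambda c: c.accuracy)

candidates = [
    Candidate("decision_tree", 0.98, latency_ms=1.0, memory_mb=5.0, explainable=True),
    Candidate("neural_network", 0.99, latency_ms=20.0, memory_mb=500.0, explainable=False),
]
winner = choose(candidates, max_latency_ms=5.0, max_memory_mb=100.0, need_explainable=True)
print(winner.name)  # decision_tree
```

Treating the practical demands as hard constraints is a design choice. If the extra point of accuracy mattered more than latency or explainability, relaxing the limits would let the neural network win instead.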

Summary

In summary, Occam’s Razor is a philosophical concept that holds relevance in machine learning. It posits that when all factors are equal, a simpler model should be favored over a more complex one. However, this does not imply that simpler models are always better; rather, a model must be complex enough to capture the patterns in the dataset while remaining simple enough to avoid overfitting.

To effectively implement Occam's Razor, one should always compare the generalization errors of various models and account for the practical demands of the problem at hand before selecting a simpler model.

Sources

- Duignan, Occam’s razor, (2021), Britannica.

- Domingos, Occam’s Two Razors: The Sharp and the Blunt, (1998), KDD’98: Proceedings of the Fourth International Conference on Knowledge Discovery and Data Mining.
