Enhancing LLM Reasoning: The DiVeRSe Approach Unveiled


Chapter 1: Understanding LLM Reasoning Limitations

Large Language Models (LLMs) have shown proficiency in various tasks, such as natural language processing, question answering, and text generation. However, they often face challenges with reasoning tasks including arithmetic, commonsense understanding, and inductive reasoning.

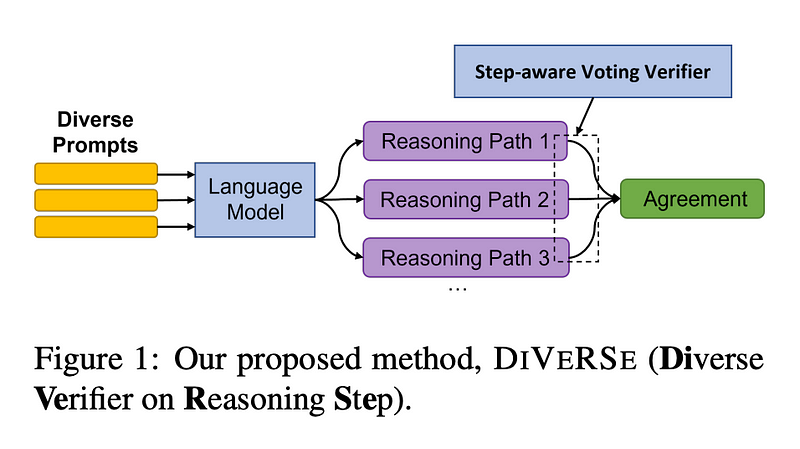

In a recent study, researchers introduced a novel method known as the Diverse Verifier on Reasoning Steps (DiVeRSe) aimed at enhancing the reasoning capabilities of LLMs. DiVeRSe comprises three critical components:

Diverse Prompts

DiVeRSe builds several different prompts for the same question, each using a different set of worked examples, and samples multiple reasoning paths from each prompt. This encourages the LLM to explore a broader range of candidate solutions instead of committing to the first reasoning path it produces.
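The prompt-diversification step can be sketched as follows. This is a minimal sketch, assuming diversity comes from drawing a different random subset of few-shot exemplars for each prompt. The function `build_diverse_prompts`, its parameters, and the exemplar pool are hypothetical illustrations, not code from the paper. In a full pipeline, each prompt would then be sent to the LLM several times with sampling enabled to collect many reasoning paths.

```python
import random
from typing import List, Tuple

# Hypothetical exemplar format: (question, worked reasoning ending in an answer).
Exemplar = Tuple[str, str]


def build_diverse_prompts(question: str, pool: List[Exemplar],
                          num_prompts: int, shots: int,
                          seed: int = 0) -> List[str]:
    """Build `num_prompts` few-shot prompts, each from a different random
    subset of `shots` exemplars, all ending with the same target question."""
    rng = random.Random(seed)  # fixed seed so the prompts are reproducible
    prompts = []
    for _ in range(num_prompts):
        chosen = rng.sample(pool, shots)  # a fresh exemplar subset per prompt
        demo = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in chosen)
        prompts.append(f"{demo}\n\nQ: {question}\nA:")
    return prompts


# Hypothetical pool of 8 worked examples.
pool = [(f"question {i}", f"reasoning {i}. The answer is {i}.") for i in range(8)]
prompts = build_diverse_prompts("What is 2 + 3?", pool, num_prompts=5, shots=3)
print(len(prompts))  # 5
```

Varying the exemplars, rather than only the sampling temperature, is what distinguishes this step from plain self-consistency sampling with a single fixed prompt.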

Voting Verifier

Rather than taking a simple majority vote over the sampled answers, DiVeRSe uses a verifier to weight each reasoning path's vote, which down-weights paths that are likely to be wrong. The verifier is a separately trained language model that scores how likely a reasoning path is to reach the correct answer.
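Verifier-weighted voting can be illustrated in a few lines. This is a minimal sketch, assuming each sampled path has already been reduced to its final answer and a verifier score between 0 and 1; the scores below are made up, and `weighted_vote` is a hypothetical helper. Each answer's weight is the sum of the scores of the paths that reach it, and the answer with the largest total wins.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


def weighted_vote(paths: List[Tuple[str, float]]) -> str:
    """Return the answer whose paths have the largest summed verifier score.

    Each path is (final_answer, verifier_score); the score is assumed to be
    the verifier's estimate that the path is correct.
    """
    totals: Dict[str, float] = defaultdict(float)
    for answer, score in paths:
        totals[answer] += score  # each path votes with weight = its score
    return max(totals, key=totals.get)


# Hypothetical sampled paths: three low-confidence "6" paths, two confident "5" paths.
sampled = [("5", 0.9), ("6", 0.2), ("6", 0.3), ("6", 0.25), ("5", 0.8)]

majority = Counter(a for a, _ in sampled).most_common(1)[0][0]
print(majority)                 # 6  (plain majority vote)
print(weighted_vote(sampled))   # 5  (verifier-weighted vote)
```

The example shows why weighting matters: a plain majority vote picks "6" because it appears most often, while the verifier-weighted vote picks "5" because its paths received much higher scores.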

Step-Aware Voting

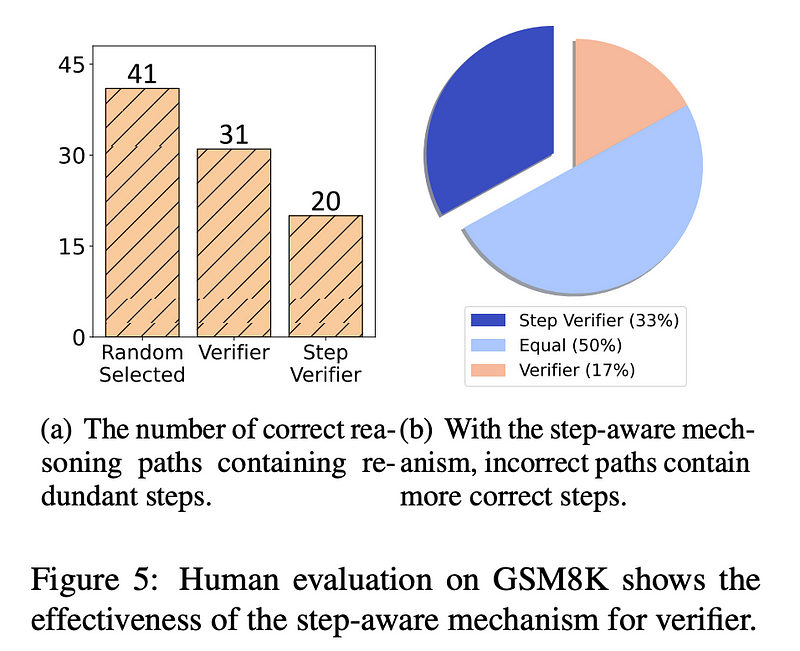

DiVeRSe extends the voting verifier to be step-aware: instead of assigning a single score to the entire reasoning path, it also scores each individual reasoning step. This helps catch cases where some steps of a path are correct while others are wrong, which a single path-level score would hide.
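Training a step-aware verifier requires a label for each step, not just for each path. The sketch below shows one way such labels could be derived automatically. It is only an illustration under a simplifying assumption of ours, loosely inspired by the paper's idea of reusing correct paths: a step is labeled correct if the sequence of steps up to and including it also begins some path that reached the gold answer. The exact-string matching, the `step_labels` helper, and the example paths are hypothetical.

```python
from typing import List, Set, Tuple

# A reasoning path: (list of step strings, final answer).
Path = Tuple[List[str], str]


def step_labels(paths: List[Path], gold: str) -> List[List[int]]:
    """Label each step 1 (supported by a correct path) or 0 (not supported).

    Simplifying assumption: a step is labeled 1 if the prefix of steps ending
    at it is also a prefix of some path whose final answer equals `gold`.
    """
    good_prefixes: Set[Tuple[str, ...]] = set()
    for steps, answer in paths:
        if answer == gold:
            for k in range(1, len(steps) + 1):
                good_prefixes.add(tuple(steps[:k]))
    return [[int(tuple(steps[:k + 1]) in good_prefixes) for k in range(len(steps))]
            for steps, _ in paths]


paths = [
    (["3 + 2 = 5", "So the answer is 5"], "5"),             # correct path
    (["3 + 2 = 5", "5 - 1 = 4", "So the answer is 4"], "4"),  # goes wrong at step 2
    (["3 * 2 = 6", "So the answer is 6"], "6"),             # wrong from the start
]
print(step_labels(paths, "5"))  # [[1, 1], [1, 0, 0], [0, 0]]
```

Note how the second path keeps credit for its correct first step, which is exactly the information a path-level label would discard.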

Chapter 2: Practical Application of DiVeRSe

The step-aware verifier assigns a score to each step along a reasoning path. As illustrated, the step scores for the correct reasoning path stay high, while those for the incorrect path begin to drop at the step where the error occurs. This suggests that the step-aware verifier not only improves the model's performance but also makes its reasoning more interpretable, since the scores indicate which steps are likely correct.
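Given per-step scores, locating where a path likely goes wrong is straightforward. This is a minimal sketch, assuming the verifier has already produced one score per step; the 0.5 cutoff, the `first_suspect_step` helper, and the score lists are hypothetical values chosen for illustration.

```python
from typing import List, Optional


def first_suspect_step(step_scores: List[float], threshold: float = 0.5) -> Optional[int]:
    """Return the index of the first step whose verifier score falls below
    `threshold`, or None if every step stays at or above it."""
    for i, score in enumerate(step_scores):
        if score < threshold:
            return i
    return None


correct_path = [0.92, 0.90, 0.88, 0.91]    # hypothetical scores: stay high
incorrect_path = [0.90, 0.87, 0.41, 0.22]  # hypothetical scores: drop at step 2

print(first_suspect_step(correct_path))    # None
print(first_suspect_step(incorrect_path))  # 2
```

A fixed threshold is the simplest choice; looking for a sharp drop between consecutive steps would be an alternative when absolute score levels vary across questions.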

The first video titled "What's Next in LLM Reasoning?" with Roland Memisevic discusses the advancements in LLM reasoning techniques and future prospects.

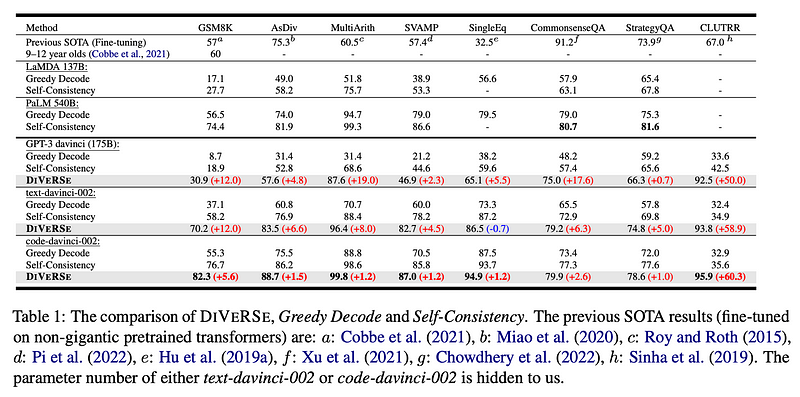

Evaluation of DiVeRSe

Researchers assessed DiVeRSe across various reasoning tasks and observed consistent improvements over baseline methods. Notably, in arithmetic reasoning, DiVeRSe achieved an impressive 95% accuracy, significantly surpassing the 85% accuracy of the baseline approaches.

Conclusion

The findings of this research indicate that DiVeRSe is a significant step toward improving the reasoning capabilities of language models. Because it works on top of an existing LLM, sampling multiple reasoning paths and scoring them with a separately trained verifier rather than modifying the LLM itself, it could be applied in a range of settings, including educational tools, chatbots, and other systems that depend on reliable reasoning.

How might DiVeRSe be leveraged to enhance AI reasoning capabilities in future developments?

The second video titled "5 Easy Ways to Help LLMs to Reason" explores practical strategies for improving LLM reasoning abilities.

All images (figures and tables) are sourced from the DiVeRSe paper. Happy learning!
